AI-Driven Infrastructure Compilers: A Thought Experiment in Domain-Specific Software Architecture

The Problem with General-Purpose AI Coding for Infrastructure

Today's AI code generation tools work for almost any purpose, in virtually any programming language. They also work well when you want to explore a path forward: how to create a web server in Rust, for example, or a multi-read, single-write queue in Swift. The more you ask, the more code AI creates for you. But is this code really production-ready? Does it contain security vulnerabilities or violate architectural best practices?

When it comes to foundational infrastructure for your app or service, general-purpose AI code generation tools fall short. What we really need is specialized intelligence built for specific infrastructure domains.

I started thinking about this problem when planning several iOS apps with overlapping infrastructure needs. I didn't want shared code that might go unused in any given app; I wanted to describe my infrastructure requirements and get specific, tailored code for each one. This led me to explore a concept I'm calling AI-Driven Infrastructure Compilers. It's still early thinking, but I believe there's something worth investigating here.

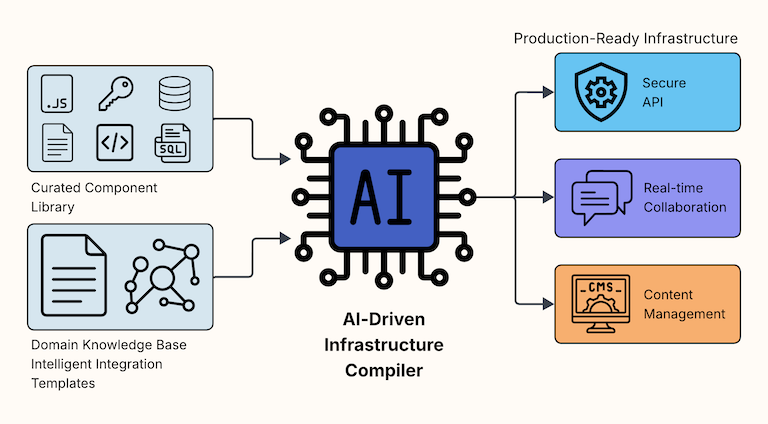

Introducing AI-Driven Infrastructure Compilers

AI-Driven Infrastructure Compilers represent a fundamentally different approach: purpose-built systems that generate production-ready infrastructure for specific application domains by selecting and wiring together battle-tested, pre-validated components through code generation. Only what you need is compiled into the infrastructure codebase. Leaving out unused code reduces complexity and makes the result easier to understand and update.

Instead of one massive AI trying to understand all possible architectures, imagine specialized compilers:

- "Secure API" Compiler: Specializes in TypeScript/Node.js ecosystems, understands modern authentication (OAuth, WebAuthn), generates microservices

- "Real-time Collaboration" Compiler: Built for React + WebRTC, handles operational transforms and conflict resolution for collaborative applications

- "Content Management" Compiler: Headless CMS + static site generation, handles content workflows and deployment pipelines

Each compiler is an expert in one domain, with deep knowledge of proven components and architectural patterns specific to that use case.

The Domain-Specific Advantage

This approach solves fundamental problems with general-purpose AI coding:

Constrained Solution Space

Instead of choosing between thousands of possible frameworks, a "Secure API" compiler works with a curated set of battle-tested components for its chosen technology stack. The AI doesn't waste cycles evaluating unsuitable options.
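To make the constrained solution space concrete, here is a minimal TypeScript sketch. It assumes a hypothetical catalog format; the component names (`framework-a`, `oauth-lib-a`, and so on) and the `audited` flag are placeholders I made up for illustration, not real packages or a real registry.

```typescript
// Hypothetical catalog entry: every name and field here is illustrative.
type Capability = "http-framework" | "auth" | "rate-limiting";

interface CatalogEntry {
  name: string;
  capability: Capability;
  audited: boolean; // has the component passed the curators' review?
}

// The compiler only ever sees this short, curated list.
const secureApiCatalog: CatalogEntry[] = [
  { name: "framework-a", capability: "http-framework", audited: true },
  { name: "oauth-lib-a", capability: "auth", audited: true },
  { name: "webauthn-lib-a", capability: "auth", audited: true },
  { name: "limiter-a", capability: "rate-limiting", audited: false },
];

// Return the audited candidates for one capability, in catalog order.
function candidatesFor(
  catalog: CatalogEntry[],
  capability: Capability
): string[] {
  return catalog
    .filter((entry) => entry.capability === capability && entry.audited)
    .map((entry) => entry.name);
}

const authChoices = candidatesFor(secureApiCatalog, "auth");
const limiterChoices = candidatesFor(secureApiCatalog, "rate-limiting");
```

Here `authChoices` holds the two audited auth libraries, while `limiterChoices` is empty because the only rate limiter has not been audited yet. The point is that the search happens over a handful of vetted entries rather than the open ecosystem.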

Domain Intelligence

The compiler knows that if you choose microservices, you'll need service discovery. If you require HIPAA compliance, it applies specific security configurations. This knowledge is curated specifically for this compiler—different teams and companies will build their own "Secure API" compilers with their preferred component choices and architectural opinions.
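One way this kind of domain knowledge could be encoded is as explicit rules over the requirements. The sketch below is an assumption on my part, not a finished design: the `ApiRequirements` and `DomainRule` shapes are hypothetical, and the items added for HIPAA are stand-ins for whatever a given team's compiler would actually apply.

```typescript
// Hypothetical requirement and rule shapes; the rule contents are examples only.
interface ApiRequirements {
  architecture: "microservices" | "monolith";
  compliance: string[]; // e.g. ["HIPAA"]
}

interface DomainRule {
  description: string;
  applies: (req: ApiRequirements) => boolean;
  adds: string[]; // components or settings the rule pulls in
}

const domainRules: DomainRule[] = [
  {
    description: "Microservices need a way to find each other",
    applies: (req) => req.architecture === "microservices",
    adds: ["service-discovery"],
  },
  {
    description: "HIPAA workloads get stricter security settings (illustrative)",
    applies: (req) => req.compliance.indexOf("HIPAA") !== -1,
    adds: ["audit-logging", "encryption-at-rest"],
  },
];

// Collect everything implied by the requirements, de-duplicated and sorted.
function impliedComponents(req: ApiRequirements, rules: DomainRule[]): string[] {
  const implied: string[] = [];
  for (const rule of rules) {
    if (!rule.applies(req)) continue;
    for (const item of rule.adds) {
      if (implied.indexOf(item) === -1) implied.push(item);
    }
  }
  return implied.sort();
}

const implied = impliedComponents(
  { architecture: "microservices", compliance: ["HIPAA"] },
  domainRules
);
// implied is ["audit-logging", "encryption-at-rest", "service-discovery"]
```

Because the rules are plain data plus predicates, a different team could ship the same compiler shell with a different rule set that reflects its own architectural opinions.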

Deterministic Output

The same requirements within a domain produce identical infrastructure. Your "secure messaging API with rate limiting" always generates the same proven architecture.
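Determinism is easier to reason about with a small example. The following TypeScript sketch assumes a hypothetical `MessagingApiRequirements` shape and shows one possible way to get there: normalize the request, then derive the plan with a pure function. It also assumes that any model call sits outside this path, or that its result is pinned, since sampling from a model inside the function would break the guarantee.

```typescript
// Hypothetical requirements shape; field names are illustrative.
interface MessagingApiRequirements {
  auth: "oauth" | "webauthn";
  features: string[];
}

// Put the request into one canonical form: fixed field order, sorted features.
function normalizeRequirements(
  req: MessagingApiRequirements
): MessagingApiRequirements {
  return { auth: req.auth, features: req.features.slice().sort() };
}

// Stable key that could be used for caching or comparing requests.
function fingerprint(req: MessagingApiRequirements): string {
  return JSON.stringify(normalizeRequirements(req));
}

// Pure function of the normalized request: no randomness, clock, or network.
function planFor(req: MessagingApiRequirements): string[] {
  const normalized = normalizeRequirements(req);
  return ["auth:" + normalized.auth].concat(
    normalized.features.map((feature) => "feature:" + feature)
  );
}

// The same request written two different ways.
const planA = planFor({ auth: "oauth", features: ["rate-limiting", "messaging"] });
const planB = planFor({ features: ["messaging", "rate-limiting"], auth: "oauth" });
```

`planA` and `planB` contain the same entries in the same order, even though the two requests list their fields and features differently.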

Focused Prompting

Instead of "build me an app," you get domain-specific questions: "Authentication method? Database preference? Expected concurrent users? Compliance requirements?"
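A focused question set could be as simple as a list of questions with closed answer options. This TypeScript sketch is illustrative only: the `Question` shape, the question ids, and the option values are my assumptions, and I've left out free-form answers such as expected concurrent users to keep it short.

```typescript
// Hypothetical question set; wording and options are illustrative.
interface Question {
  id: string;
  prompt: string;
  options: string[]; // closed list of answers this compiler supports
}

const secureApiQuestions: Question[] = [
  { id: "auth", prompt: "Authentication method?", options: ["oauth", "webauthn"] },
  { id: "database", prompt: "Database preference?", options: ["postgres", "sqlite"] },
  { id: "compliance", prompt: "Compliance requirements?", options: ["none", "hipaa"] },
];

// Return a list of problems; an empty list means the answers are usable.
function validateAnswers(
  questions: Question[],
  answers: { [id: string]: string | undefined }
): string[] {
  const problems: string[] = [];
  for (const question of questions) {
    const answer = answers[question.id];
    if (answer === undefined) {
      problems.push("missing answer: " + question.id);
    } else if (question.options.indexOf(answer) === -1) {
      problems.push("unsupported answer for " + question.id + ": " + answer);
    }
  }
  return problems;
}
```

Calling `validateAnswers(secureApiQuestions, { auth: "password" })` reports one unsupported answer and two missing ones, which is the kind of early, domain-specific feedback an open-ended prompt can't give.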

How It Works: The Modern API Example

Consider requesting a "secure API with content management":

Traditional AI Approach:

- Generates custom TypeScript/Node.js configurations from scratch

- May choose outdated authentication practices

- Creates novel CMS implementations

- Requires extensive security and workflow review

AI-Driven Infrastructure Compiler Approach:

- Asks domain-specific questions: "OAuth or WebAuthn? Headless CMS preference? Static site generation needed?"

- Selects from pre-validated TypeScript/Node.js API frameworks + CMS configurations

- Applies hardened authentication libraries and proven content workflows

- Outputs production-ready infrastructure code tailored to the domain's needs

Inside an Infrastructure Compiler

Each domain-specific compiler consists of:

Curated Component Library

Battle-tested, security-audited building blocks specific to the domain. The "Secure API" compiler includes proven TypeScript/Node.js API frameworks, OAuth libraries, CMS connectors—not graphics rendering or blockchain libraries.

Domain Knowledge Base

Architectural patterns, security requirements, and integration approaches specific to the use case. Financial systems require audit trails; real-time applications need conflict resolution; REST APIs require proper error handling.

Intelligent Integration Templates

Pre-tested combinations of proven components that have been validated together in various configurations. The compiler doesn't just drop in boilerplate code; it generates architecture-specific implementations that are intentionally designed. For example, when you need XChaCha20 payload encryption, the compiler doesn't bolt on a generic crypto library as an opaque block. Instead, it generates integrated code where the encryption appears purpose-built for your specific RESTful service architecture, with proper error handling, key management, and performance optimizations that match your chosen components.
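The "validated together" part of this idea can be sketched as a lookup that refuses any pairing nobody has tested. The TypeScript below is a rough illustration under my own assumptions: the `ValidatedCombination` record, the component names, and the template names are all hypothetical, and the actual code generation behind each template is out of scope here.

```typescript
// Hypothetical record of component combinations that were tested together.
interface ValidatedCombination {
  framework: string;
  encryption: string;
  template: string; // name of the integration template proven for this pair
}

const validatedCombinations: ValidatedCombination[] = [
  { framework: "framework-a", encryption: "xchacha20", template: "rest-encrypted-payload-v1" },
  { framework: "framework-a", encryption: "none", template: "rest-plain-v1" },
];

// Look up the template for a pair, refusing anything that was never validated.
function templateFor(framework: string, encryption: string): string {
  for (const combo of validatedCombinations) {
    if (combo.framework === framework && combo.encryption === encryption) {
      return combo.template;
    }
  }
  throw new Error(
    "No validated template for " + framework + " + " + encryption
  );
}

const chosenTemplate = templateFor("framework-a", "xchacha20");
```

Failing loudly on an unknown pairing is a deliberate choice in this sketch: falling back to improvised glue code is exactly what the compiler is supposed to avoid.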

Intelligent Assembly

AI trained specifically on the patterns of one domain, understanding the relationships between components and the implications of different architectural choices within that context.

The Code Generation Breakthrough

The real innovation opportunity lies in the code generation approach. AI democratizes architecture by interpreting vast amounts of code and architectural knowledge. While this enables anyone to build systems, businesses running critical infrastructure need more than interpreted patterns—they need the curated wisdom of experienced architects who understand not just what works, but why it works and when it fails.

Traditional generators that copy the same code structure for every project are too rigid, while pure AI generation creates unpredictable code. The sweet spot is expert-guided generation: human architects define the architectural patterns and component relationships, then the system generates the specific code needed—no unused boilerplate, no over-abstraction, just the infrastructure you need.

We've been building cars for over 100 years, yet every model still requires human expertise to design the architecture and select proven components. Software infrastructure is no different—we shouldn't wait for AI to rediscover what experienced architects already know works. The purpose of infrastructure compilers is to leverage what AI excels at (rapid assembly, configuration management, and code generation) while combining it with what human architects do best (understanding trade-offs, curating components, and encoding domain-specific knowledge).

Beyond Code: Intelligent Documentation Generation

Each compiler generates not just code, but comprehensive documentation that enables future AI interaction:

- System Architecture: What subsystems exist and how they connect

- Configuration Rationale: Why specific choices were made

- Extension Points: How the system can be safely modified

- Operational Requirements: Deployment, monitoring, and maintenance needs
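The four documentation sections above could be rendered from the same data the compiler already holds about the system it generated. This is a minimal TypeScript sketch, assuming a hypothetical `GeneratedSystem` record; a real compiler would carry far more detail, and the plain-text layout is just one option.

```typescript
// Hypothetical description of a generated system; all fields are illustrative.
interface GeneratedSystem {
  subsystems: string[];
  decisions: { choice: string; reason: string }[];
  extensionPoints: string[];
  operations: string[];
}

// Render items as a "- " bullet list, one item per line.
function bulletList(items: string[]): string {
  return items.map((item) => "- " + item).join("\n");
}

// Produce one plain-text document with a section per documentation category.
function renderDocs(system: GeneratedSystem): string {
  const sections = [
    "System Architecture\n" + bulletList(system.subsystems),
    "Configuration Rationale\n" +
      bulletList(system.decisions.map((d) => d.choice + ": " + d.reason)),
    "Extension Points\n" + bulletList(system.extensionPoints),
    "Operational Requirements\n" + bulletList(system.operations),
  ];
  return sections.join("\n\n");
}

const exampleDocs = renderDocs({
  subsystems: ["api-gateway", "auth-service"],
  decisions: [{ choice: "oauth", reason: "requested in the questionnaire" }],
  extensionPoints: ["add routes under the generated router module"],
  operations: ["monitor auth-service error rate"],
});
```

Keeping the rationale next to each choice is what would let a later AI session, or a new team member, modify the system without guessing why it looks the way it does.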

The Compiler Marketplace

A crucial insight: there won't be one "Secure API" compiler—there will be hundreds, each with its own opinionated architecture and technology stack. This provides product builders with choices that strike a balance between speed to market and trusted infrastructure.

Consider the possibilities:

Enterprise Internal Compilers

While a financial services firm's "Secure API" compiler prioritizes security, a streaming company's "Secure API" compiler must also optimize for performance.

I learned this firsthand at Traffic.com in 2001, where our traffic data was updated every two minutes, and I had to build all the caching infrastructure from scratch. Today, there are dozens of proven caching strategies across different tech stacks. That experience helped shape my thinking—this feels exactly like the kind of scenario where a domain-specific compiler would shine.

Vendor-Optimized Compilers

Cloud providers create compilers tailored to their specific ecosystems. The "AWS Secure API Compiler" generates different infrastructure than the "Google Cloud" or "Azure" versions, each leveraging their platform's strengths.

Open Source Community Compilers

Different architectural schools develop competing open-source compilers. The "Microservices-First Compiler" generates different patterns than the "Modular Monolith Compiler."

Specialized Industry Compilers

Healthcare organizations require HIPAA-compliant configurations; fintech companies need secure payment processing; and government contractors need to comply with federal security regulations. Each creates domain-specific variants.

Consultant and Agency Compilers

Development firms build compilers that embody their expertise and preferred stacks, creating competitive differentiation in how quickly they can deliver proven solutions.

This marketplace dynamic makes AI-Driven Infrastructure Compilers both more achievable (you don't need to solve everything) and more valuable (specialization commands premium pricing). Competition drives innovation in component curation, architectural patterns, and domain expertise encoding.

The question isn't who will build the compiler for each domain—it's who will build the best compiler for specific use cases, industries, and architectural philosophies.

Domain-specific compilers succeed where general AI fails because:

- Expertise Depth: Deep knowledge of one domain beats shallow understanding of everything

- Reduced Complexity: Smaller solution space means better decisions

- Proven Patterns: Components and architectures have been validated in real-world usage

- Predictable Behavior: Deterministic outputs enable reliable deployment and maintenance

The Innovation Challenge: Learning vs. Encoding

Here's the real tension: How do we ensure that AI systems reflect emerging architectural innovations before those ideas become stale, commoditized, or stripped of context?

General AI faces an inherent challenge: it learns from past code and averages existing patterns. When a major streaming company develops a breakthrough caching architecture, or when a fintech startup pioneers a new security pattern, how does that innovation make it into AI's knowledge base? The training cycle is slow, and by the time innovative patterns are widely adopted enough to influence AI training, they're no longer innovations—they're standard practice.

But here's the bigger problem: even if AI learned these patterns, how would you access them? Can you ask ChatGPT today "build me infrastructure using the same patterns that successful companies in this space use" and get their actual architectural approaches? Of course not. That knowledge rarely exists in public form—let alone in a way AI can meaningfully learn from.

And why would successful companies ever share their architectural secrets for AI training? They lose all control over how that knowledge gets used and gain nothing in return.

Domain-specific compilers offer a different model: companies can choose to share specific architectural patterns while maintaining control over how they're used. A streaming company could create a "High-Performance Video Delivery" compiler that captures their innovations without exposing proprietary details. They control what's shared, how it's configured, and potentially even monetize their architectural expertise.

This isn't just about speed—it's about creating an ecosystem where innovation can be shared strategically rather than scraped indiscriminately.

Why This Works Better

Organizations benefit from:

- Faster Time-to-Market: Skip the research; the compiler knows the best practices for your domain

- Built-in Security: Components are pre-hardened, and configurations follow security best practices

- Reduced Risk: Proven architectures have known operational characteristics

- Easier Maintenance: Standard patterns with established operational procedures

Looking Forward

The future of infrastructure development lies not in AI systems that attempt to understand everything, but in specialized compilers that understand specific domains deeply. As these tools mature, we'll see:

- Ecosystem Development: Component libraries optimized for compiler consumption

- Domain Expertise Encoding: Architectural knowledge captured in reusable, intelligent systems

- Faster Innovation: Developers focus on business logic while compilers handle infrastructure complexity

Just as a regular compiler transforms code into something reliable, infrastructure compilers transform your intent into infrastructure that works.

General AI is like the Model T of coding: it democratizes access but isn't optimized for today's performance and security demands. Infrastructure compilers may be the next evolution: specialized systems that go beyond accessibility toward precision.

But here's where it gets interesting: maybe this isn't an either/or future. General AI may eventually learn to discover and leverage these infrastructure compilers, orchestrating them in ways we haven't imagined yet. That would contradict my current thinking about keeping them separate—and honestly, I'm not sure how this will play out. The technology is evolving too fast for anyone to predict with certainty.

What I do know is that right now, we need better solutions for production infrastructure than what general AI provides. Whether that leads to permanent specialization or a new form of AI-orchestrated composition remains to be seen. My guess is we'll see both approaches coexist, with specialization winning where precision and reliability matter most.

This is just the beginning. I'm building early versions of this idea now and learning as I go. If you've been thinking about similar problems—or want to build one of these compilers—I'd love to connect and compare notes. I'm particularly curious: does this address a real challenge you're seeing in your work, or am I solving a problem that doesn't exist?